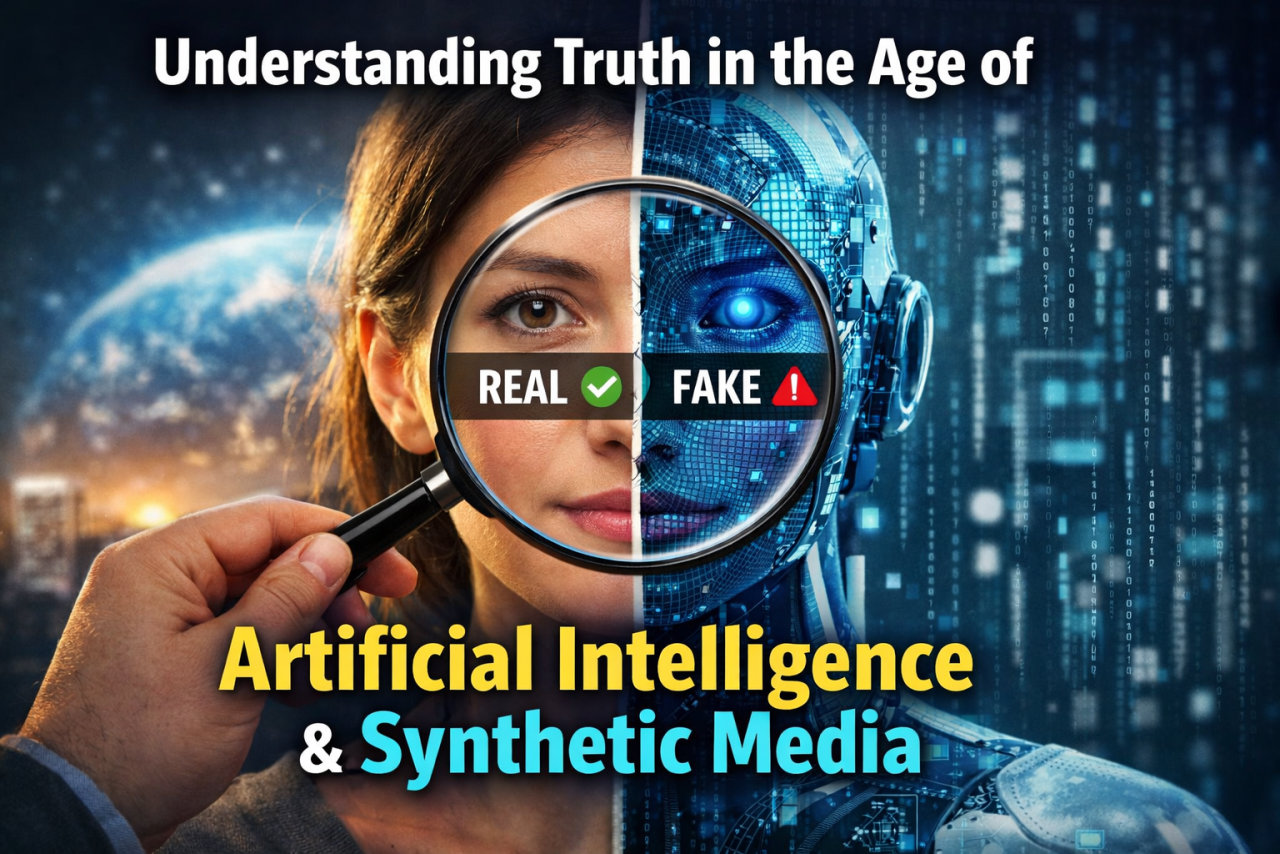

As OpenAI weighs loosening ChatGPT’s safeguards, a deeper battle is unfolding between profit and responsibility.

The recent firing of Ryan Beiermeister, a senior policy executive at OpenAI, has drawn attention to what appears to be a deep internal divide within the company behind ChatGPT. While OpenAI has stated that her January dismissal was due to “sexual discrimination against a male colleague” — an allegation Beiermeister has strongly denied — the timing has prompted wider questions about the company’s strategic direction.

At the centre of the controversy is a proposed feature reportedly referred to internally as “Adult Mode.” The feature, expected to be introduced in 2026, would relax existing restrictions that prevent ChatGPT from engaging in sexual or explicit conversations. Since its launch, ChatGPT has operated under strict content safeguards designed to limit harmful, explicit, or unsafe interactions. These guardrails have been a key part of OpenAI’s effort to position the chatbot as suitable for broad public and enterprise use.

However, leadership under Chief Executive Officer Sam Altman appears to be considering a shift. Altman has argued in public discussions that adult users should have the freedom to engage in more open conversations, suggesting that AI systems should be capable of responding to lawful adult requests. Supporters of this approach view it as an issue of user autonomy and product evolution in a competitive market.

Commercial pressures are also significant. Rivals in the artificial intelligence space, including xAI, founded by Elon Musk, have positioned themselves as less restrictive alternatives. Some platforms that allow more “edgy” or lightly moderated interactions have reported strong user engagement. In an increasingly crowded AI marketplace, maintaining growth and investor confidence has become a strategic priority.

According to reports, Beiermeister, who led the product policy team, had raised concerns about the timing and readiness of such a feature. Her objections were reportedly focused on implementation risks rather than ideology.

One major concern relates to age verification. Digital platforms continue to struggle with reliably confirming users’ ages. If explicit AI interactions become available, ensuring that minors cannot bypass safeguards would become a critical challenge. Experts have long warned that online age-gating systems are often easy to circumvent.

Another issue involves mental health. Researchers have observed that advanced conversational AI systems can encourage strong emotional attachment among users. While many interactions are harmless, there is ongoing debate about the psychological impact of highly personalised, human-like systems. Introducing sexualised content could intensify emotional dependency or blur boundaries between digital simulation and real-world relationships.

The internal debate is also unfolding amid intense competitive pressure. OpenAI has publicly acknowledged the need to remain ahead in a rapidly advancing sector. Critics, however, warn that rapid feature expansion without robust safeguards may undermine public trust — particularly for a technology that is increasingly embedded in daily life.

The circumstances surrounding Beiermeister’s exit have further fuelled scrutiny. OpenAI maintains that its action was based on workplace conduct issues. Beiermeister, in comments to media outlets, has rejected the allegation and described it as unfounded. Supporters point to her prior involvement in mentorship and diversity initiatives within the company, and suggest that her removal may be connected to broader strategic disagreements.

Her departure follows a number of high-profile exits from OpenAI in recent years, some involving employees who have emphasised safety, governance, and responsible deployment of artificial intelligence. While staff turnover is common in fast-growing technology firms, repeated departures linked to safety concerns have drawn public attention.

The broader question extends beyond one company. As AI systems become more advanced and more integrated into communication, education, and personal life, decisions about content boundaries carry significant social implications. Whether companies can balance commercial incentives with user protection remains a central issue in the governance of emerging technologies.

As OpenAI considers expanding the boundaries of what ChatGPT can discuss, the debate highlights a fundamental tension between market competition and long-term responsibility. The outcome of this internal struggle may shape not only the future of one product, but also public expectations about the limits and accountability of artificial intelligence.