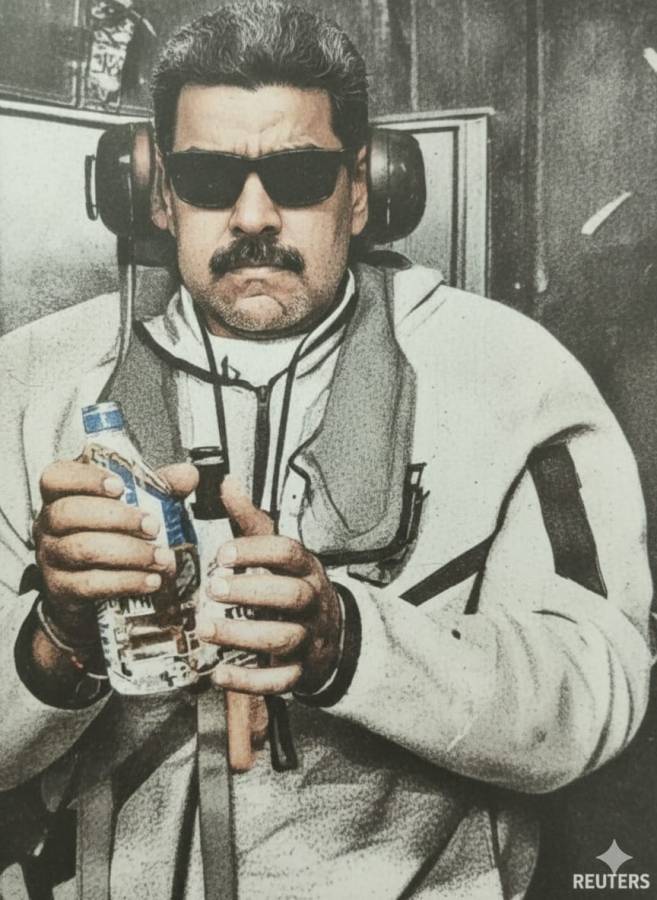

Before the first helicopter lifted off and before boots touched the ground, an algorithm was already at work — scanning signals, mapping risks, and quietly shaping the mission that would lead to the capture of Nicolás Maduro.

In the early hours of January 3, 2026, as American special operations forces moved with calculated precision in a high-stakes mission targeting Venezuelan leader Nicolás Maduro, another force was quietly at work — not in the skies or on the streets, but in streams of data. According to reports by The Wall Street Journal and Reuters, the U.S. military relied in part on Claude, an artificial intelligence model developed by Anthropic, to support the classified operation that ultimately led to Maduro’s capture and transfer to the United States to face federal charges.

The revelation marks a watershed moment. Artificial intelligence, long discussed as a future tool of warfare, is now firmly embedded in live military operations. While officials have declined to detail exactly how Claude was used, defense sources suggest it was accessed through systems integrated by Palantir Technologies, which has deep ties to U.S. defense and intelligence agencies. The arrangement reportedly allowed the AI system to operate within secure government networks.

Despite popular imagination, the AI was not commanding troops or directing gunfire. Its role was more cerebral but no less significant. Large language models like Claude are designed to process and synthesize enormous volumes of information in seconds — from intercepted communications and satellite imagery to logistical data and threat assessments. In a fluid and potentially volatile mission environment, such rapid analysis can mean the difference between success and catastrophe.

Military planners likely used AI-generated insights to sharpen situational awareness, map contingencies, and model “what-if” scenarios. Extraction routes, potential resistance patterns, and airspace vulnerabilities could be assessed and reassessed in near real time. Instead of replacing human decision-makers, the AI functioned as a cognitive multiplier, accelerating analysis and reducing the burden on intelligence teams working under immense pressure.

Yet the operation’s success has ignited a debate that extends far beyond Venezuela. Anthropic’s publicly stated policies prohibit the use of its AI systems for violence, weapons development, or surveillance. The company has positioned itself as a leader in AI safety, emphasizing strict guardrails to prevent misuse. Reports that its model supported a military raid — even in an analytical capacity — have raised difficult questions about how those policies are interpreted in national security contexts.

Inside Washington, tensions appear to be mounting. Defense officials argue that future conflicts will be defined not only by firepower but by information dominance. In that environment, limiting access to cutting-edge AI tools could leave the military strategically disadvantaged. The Pentagon is said to be pressing major AI firms, including Anthropic, OpenAI, and Google, to relax certain usage restrictions when operating on classified networks. Officials contend that the government should have access to these technologies for “all lawful purposes,” a phrase that encompasses a wide spectrum of military applications.

For tech companies, the dilemma is profound. Cooperation with the Defense Department can provide significant funding and influence, but it also risks backlash from employees, civil society groups, and international observers wary of autonomous or semi-autonomous warfare. Anthropic has maintained that it does not authorize the use of its models for fully autonomous weapons and insists that any government collaboration must adhere to its ethical standards.

The broader implications are difficult to ignore. Supporters of AI integration argue that such systems can reduce casualties by enabling smarter planning and minimizing operational blind spots. Faster intelligence synthesis can prevent miscalculations and help avoid civilian harm. In the Maduro operation, proponents point to the mission's precision and the apparent absence of U.S. casualties as evidence that AI-augmented planning enhances effectiveness.

Critics counter that even advisory systems shift the moral terrain of warfare. As AI systems take on more sophisticated analytical roles, the boundary between decision support and decision-making may blur. Questions of accountability loom large: if an AI-generated assessment contributes to a flawed operation, who bears responsibility: the commander, the programmer, or the machine?

The capture of Nicolás Maduro may ultimately be remembered less for its geopolitical impact than for what it signals about the future of conflict. Warfare is entering an era in which algorithms sit alongside generals in the chain of influence. The “Silicon Soldier” is no longer a metaphor but an operational reality.

Whether governments and technology firms can establish clear, enforceable limits on how far that partnership extends will shape the next chapter of global security. For now, one thing is certain: artificial intelligence has stepped onto the battlefield, not as a weapon itself, but as a powerful new instrument in the conduct of war.